Introducing Terraform-Operator: The Basics

Terraform-Operator is a Kubernetes CRD and Controller to configure, run, and manage your Terraform resources right from your cluster. Simply write a Kubernetes manifest, apply it, and watch Terraform-Operator run Terraform. Once the run completes, it saves the Terraform outputs to a Kubernetes ConfigMap that your Pods can consume directly. This makes it a good fit for deploying alongside Services that depend on cloud resources.

Deploy the Operator

Option #1: The easiest way to install Terraform-Operator is via Helm:

$ helm repo add isaaguilar https://isaaguilar.github.io/helm-charts

$ helm install terraform-operator isaaguilar/terraform-operator --namespace tf-system --create-namespace

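Option #2: Install it via kubectl by first installing the CRD, followed by the Controller. Unlike Helm's --create-namespace flag, kubectl apply --namespace does not create the namespace, so create it first if it doesn't exist:

$ git clone https://github.com/isaaguilar/terraform-operator

$ kubectl create namespace tf-system

$ kubectl apply -f terraform-operator/deploy/crds/tf.isaaguilar.com_terraforms_crd.yaml

$ kubectl apply -f terraform-operator/deploy --namespace tf-system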
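Whichever option you used, it can help to confirm the controller is actually running before moving on. A minimal sketch, assuming the operator lives in the tf-system namespace as above; the 120s timeout is an arbitrary choice. Note that kubectl wait reports "no matching resources found" if it runs before any Pod has been created, so give the install a few seconds first.

```shell
#!/usr/bin/env bash
# wait_for_operator [NAMESPACE] [TIMEOUT]
# Blocks until every Pod in the operator namespace reports the Ready condition.
# Defaults (tf-system, 120s) are assumptions matching the install commands above.
wait_for_operator() {
  local namespace="${1:-tf-system}"
  local timeout="${2:-120s}"
  kubectl wait --for=condition=Ready pod --all \
    --namespace "$namespace" --timeout "$timeout"
}
```

Run it as wait_for_operator right after either install option; a non-zero exit status means the Pods did not become Ready in time.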

Once the operator is installed, Terraform resources are ready to be deployed. However, one critical component is missing: somewhere to store the Terraform state. Terraform-Operator can be configured to store state in any Terraform backend, and this is configured per resource. For now, let's use the default: HashiCorp Consul running in your cluster. Install it with the following commands:

$ git clone --single-branch --branch v0.19.0 https://github.com/hashicorp/consul-helm.git

$ helm upgrade --install hashicorp ./consul-helm \
    --namespace tf-system \
    --set server.replicas=1 \
    --set server.bootstrapExpect=1
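Terraform runs will fail if Consul is not up yet, so it may be worth waiting for the server to roll out. A minimal sketch; the StatefulSet name hashicorp-consul-server is an assumption inferred from the release name hashicorp (the same naming that produces the hashicorp-consul-ui Service used later in this post), and the 180s timeout is arbitrary.

```shell
#!/usr/bin/env bash
# wait_for_consul [RELEASE]
# Waits for the Consul server StatefulSet created by the consul-helm chart.
# Assumption: the chart names it "<release>-consul-server".
wait_for_consul() {
  local release="${1:-hashicorp}"
  kubectl rollout status "statefulset/${release}-consul-server" \
    --namespace tf-system --timeout 180s
}
```

If your release name differs, pass it as the first argument, or check the real name with kubectl get statefulsets -n tf-system.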

Running Terraform

Now let's pick a simple example: deploying an S3 bucket in AWS. For that we need some AWS credentials. Terraform-Operator can mount credentials from a Kubernetes Secret, so let's create one:

$ kubectl create secret generic aws-session-credentials \
    --from-literal=AWS_SECRET_ACCESS_KEY=${AWS_SECRET_ACCESS_KEY} \
    --from-literal=AWS_ACCESS_KEY_ID=${AWS_ACCESS_KEY_ID}
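If either variable is unset in your shell, the command above still runs, and the Terraform run only fails later with an authentication error. A minimal sketch that fails fast instead; the function name create_aws_secret is hypothetical, and the kubectl command inside is the same one shown above.

```shell
#!/usr/bin/env bash
# create_aws_secret
# Creates the aws-session-credentials Secret, but only when both AWS
# variables are set and non-empty in the current shell.
create_aws_secret() {
  if [ -z "${AWS_ACCESS_KEY_ID:-}" ] || [ -z "${AWS_SECRET_ACCESS_KEY:-}" ]; then
    echo "AWS_ACCESS_KEY_ID and AWS_SECRET_ACCESS_KEY must both be set" >&2
    return 1
  fi
  kubectl create secret generic aws-session-credentials \
    --from-literal=AWS_SECRET_ACCESS_KEY="${AWS_SECRET_ACCESS_KEY}" \
    --from-literal=AWS_ACCESS_KEY_ID="${AWS_ACCESS_KEY_ID}"
}
```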

Nice! The aws-session-credentials secret is ready to be referenced in the configuration. Create the S3 bucket configuration as follows:

# terraform-my-bucket.yaml
---
apiVersion: tf.isaaguilar.com/v1alpha1
kind: Terraform
metadata:
  name: my-bucket
spec:
  terraformVersion: 0.12.29
  terraformModule:
    address: https://github.com/cloudposse/terraform-aws-s3-bucket.git
  applyOnCreate: true
  applyOnUpdate: true
  applyOnDelete: true
  ignoreDelete: false # Clean up the example: destroy the bucket when kubectl delete is run
  credentials:
  - secretNameRef:
      name: aws-session-credentials
  env:
  - name: AWS_REGION
    value: us-west-2
  - name: TF_VAR_name
    value: terraform-operator-s3-bucket-example
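One practical wrinkle: S3 bucket names are globally unique across all AWS accounts, so terraform-operator-s3-bucket-example may already be taken when you try this. A minimal sketch that renders the same manifest with a bucket name of your choice; render_bucket_manifest is a hypothetical helper, and it checks only the basic S3 naming rules (3 to 63 characters; lowercase letters, digits, dots, and hyphens; starting and ending with a letter or digit), not every rule AWS enforces.

```shell
#!/usr/bin/env bash
# render_bucket_manifest BUCKET_NAME
# Prints the my-bucket Terraform manifest with TF_VAR_name set to BUCKET_NAME.
render_bucket_manifest() {
  local bucket="$1"
  # Reject disallowed characters and a leading or trailing dot/hyphen.
  case "$bucket" in
    *[!a-z0-9.-]* | [.-]* | *[.-])
      echo "invalid S3 bucket name: $bucket" >&2
      return 1
      ;;
  esac
  # Enforce the 3-63 character length limit (also rejects an empty name).
  if [ "${#bucket}" -lt 3 ] || [ "${#bucket}" -gt 63 ]; then
    echo "invalid S3 bucket name length: $bucket" >&2
    return 1
  fi
  cat <<EOF
---
apiVersion: tf.isaaguilar.com/v1alpha1
kind: Terraform
metadata:
  name: my-bucket
spec:
  terraformVersion: 0.12.29
  terraformModule:
    address: https://github.com/cloudposse/terraform-aws-s3-bucket.git
  applyOnCreate: true
  applyOnUpdate: true
  applyOnDelete: true
  ignoreDelete: false # destroy the bucket when kubectl delete is run
  credentials:
  - secretNameRef:
      name: aws-session-credentials
  env:
  - name: AWS_REGION
    value: us-west-2
  - name: TF_VAR_name
    value: ${bucket}
EOF
}
```

For example, render_bucket_manifest my-team-tfo-example-bucket > terraform-my-bucket.yaml writes a manifest you can apply in the next step.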

Ready to deploy.

$ kubectl apply -f terraform-my-bucket.yaml

terraform.tf.isaaguilar.com/my-bucket created

After a short while, you'll see the Terraform pod:

$ kubectl get pods

NAME              READY   STATUS    RESTARTS   AGE
my-bucket-nlv85   1/1     Running   1          39s

Run kubectl logs -f my-bucket-nlv85 to follow the Terraform run logs. Once the pod completes, the Terraform outputs are saved to a ConfigMap. Let's check out what we've got:

$ kubectl get configmap my-bucket-output -oyaml

apiVersion: v1
data:
  access_key_id: ""
  bucket_arn: arn:aws:s3:::terraform-operator-s3-bucket-example
  bucket_domain_name: terraform-operator-s3-bucket-example.s3.amazonaws.com
  bucket_id: terraform-operator-s3-bucket-example
  bucket_region: us-west-2
  bucket_regional_domain_name: terraform-operator-s3-bucket-example.s3.us-west-2.amazonaws.com
  enabled: "true"
  secret_access_key: ""
  user_arn: ""
  user_enabled: "false"
  user_name: ""
  user_unique_id: ""
kind: ConfigMap
metadata:
  creationTimestamp: "2020-10-22T04:45:48Z"
  name: my-bucket-output
  namespace: default
  resourceVersion: "416381"
  selfLink: /api/v1/namespaces/default/configmaps/my-bucket-output
  uid: 74a2e9d0-1421-11eb-bbe8-025000000001
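In scripts you usually want a single value rather than the whole ConfigMap. A minimal sketch that waits for the output ConfigMap to appear and then prints one key using kubectl's jsonpath output. The "<name>-output" naming is inferred from this example (my-bucket produced my-bucket-output), and get_tf_output is a hypothetical helper name.

```shell
#!/usr/bin/env bash
# get_tf_output NAME KEY [TRIES]
# Polls every 5 seconds, up to TRIES times (default 60), for the ConfigMap
# "<NAME>-output", then prints the value stored under KEY.
get_tf_output() {
  local name="$1" key="$2" tries="${3:-60}"
  local i=0
  until kubectl get configmap "${name}-output" >/dev/null 2>&1; do
    i=$((i + 1))
    if [ "$i" -ge "$tries" ]; then
      echo "timed out waiting for configmap ${name}-output" >&2
      return 1
    fi
    sleep 5
  done
  kubectl get configmap "${name}-output" -o "jsonpath={.data.${key}}"
}
```

For this example, get_tf_output my-bucket bucket_arn should print the bucket_arn value shown in the ConfigMap above.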

Congratulations! The S3 bucket is ready to be used. I'll outline what happens inside Terraform-Operator to get to this point in another post. For now, let's explore a bit further.
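Because the outputs live in an ordinary ConfigMap, a Pod can load all of them as environment variables with envFrom. A minimal sketch: the Pod name bucket-consumer, the container name, and the busybox image are illustrative assumptions; only the ConfigMap name my-bucket-output comes from this walkthrough. The quoted heredoc keeps $bucket_id and $bucket_region literal so that the container's shell expands them.

```shell
#!/usr/bin/env bash
# bucket_consumer_manifest
# Prints a hypothetical Pod that imports every key of my-bucket-output
# as an environment variable and echoes two of them.
bucket_consumer_manifest() {
  cat <<'EOF'
apiVersion: v1
kind: Pod
metadata:
  name: bucket-consumer
spec:
  restartPolicy: Never
  containers:
  - name: app
    image: busybox
    command: ["sh", "-c", "echo bucket is $bucket_id in $bucket_region"]
    envFrom:
    - configMapRef:
        name: my-bucket-output
EOF
}
```

Apply it with bucket_consumer_manifest | kubectl apply -f - and read the result with kubectl logs bucket-consumer.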

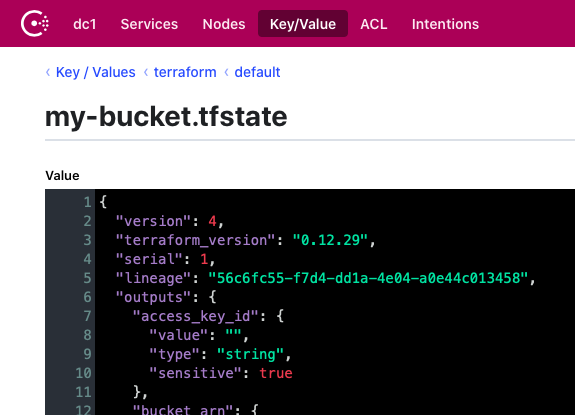

Terraform State with Consul

When using Terraform-Operator's default Terraform state backend, Consul, the state is stored in Consul's Key/Value data store. To browse it, port-forward the Consul UI Service:

$ kubectl port-forward -n tf-system svc/hashicorp-consul-ui 8000:80
Forwarding from 127.0.0.1:8000 -> 8500
Forwarding from [::1]:8000 -> 8500

Then open localhost:8000 in a browser to see the UI.

Or query Consul's HTTP API directly:

$ curl "http://127.0.0.1:8000
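Consul's KV HTTP API lists keys with GET /v1/kv/<prefix>?keys and returns a raw value with GET /v1/kv/<key>?raw. A minimal sketch of both calls through the port-forward above; CONSUL_ADDR and the helper names are assumptions for illustration, and the exact key Terraform-Operator uses for my-bucket is not spelled out here, so list the keys first and pick the matching one.

```shell
#!/usr/bin/env bash
# Base address of the port-forwarded Consul Service; override CONSUL_ADDR
# if you forwarded a different local port.
consul_addr() {
  printf '%s' "${CONSUL_ADDR:-http://127.0.0.1:8000}"
}

# consul_list_keys [PREFIX]: JSON array of every key under PREFIX.
consul_list_keys() {
  curl --silent --fail "$(consul_addr)/v1/kv/${1:-}?keys"
}

# consul_get_raw KEY: the value stored at KEY, without Consul's JSON envelope.
consul_get_raw() {
  curl --silent --fail "$(consul_addr)/v1/kv/$1?raw"
}
```

With the default backend settings, the raw value for a state key should typically be the Terraform state JSON, unless the backend was configured to compress it.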

Cleanup Terraform

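To clean up, delete the Terraform resource. Because the manifest sets ignoreDelete: false, Terraform-Operator also destroys the S3 bucket: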

$ kubectl delete terraform my-bucket

terraform.tf.isaaguilar.com "my-bucket" deleted
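That removes the bucket, but the supporting pieces from this walkthrough remain. A minimal sketch for removing them too, assuming the operator was installed with Helm (Option #1), Consul with the release name hashicorp, the secret in the default namespace, and that the destroy run triggered by kubectl delete terraform my-bucket has already finished (check its Pod logs). Removing the secret or Consul earlier could leave that run without credentials or state. The function name is hypothetical.

```shell
#!/usr/bin/env bash
# cleanup_walkthrough
# Removes the secret, both Helm releases, and the tf-system namespace,
# stopping at the first command that fails.
cleanup_walkthrough() {
  kubectl delete secret aws-session-credentials --namespace default &&
    helm uninstall hashicorp --namespace tf-system &&
    helm uninstall terraform-operator --namespace tf-system &&
    kubectl delete namespace tf-system
}
```

Deleting the namespace also removes any Consul volumes left in it, so only run this once you no longer need the stored state.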

Summary

Terraform-Operator provides an organized structure to define your infrastructure-as-code with a first-class Kubernetes experience. It's easy to get set up and running in just a few minutes, and cleanup is a breeze. To learn more, check out the official docs at https://github.com/isaaguilar/terraform-operator/tree/master/docs.

I would love to hear your feedback and expand on the project!

Send bugs, questions, and feature requests for Terraform-Operator to isaaguilar/terraform-operator by opening a new issue!